LLM VibeCheck v 1.0 (Suite)

A downloadable tool for Windows

Tired of the complex setup and guesswork involved in running local Large Language Models?

LLM V-C is a free, powerful manager for Windows that makes launching and controlling your own AI chat effortless.

✨ Our tool automatically scans your PC's hardware, tells you exactly which models will run smoothly, and gets you chatting with a single click. No more command lines, no more configuration files. Just pure vibes and powerful AI at your fingertips.

💻 Smart Hardware Scan: Instantly know which LLMs your computer can handle.

🔄 Always Up-to-Date: Get the latest and greatest models delivered automatically from our dynamic library.

🚀 One-Click Launch: Go from zero to chatting in seconds.

🌐 Access Anywhere: Connect to your local AI chat from your phone or any other device on your network.

🎛️ Total Control: Easily manage and shut down processes with our user-friendly interface.

Stop wrestling with setup and start vibing with your local AI. Download LLM V-C today!

Features

- Smart Hardware Scanning: Python-based core logic intelligently analyzes your system's capabilities (GPU, VRAM, RAM) to recommend the best-performing LLMs.

- Dynamic Model Library: The app fetches an up-to-date list of models from a central JSON almanac, so you always have access to the latest LLMs without needing to update the application.

- Lightweight & Portable: Built with a Flask backend and compiled into a single, standalone .exe using PyInstaller. No installation or dependencies needed.

- Optimized Local API: A fast and responsive UI powered by a local API that uses separate calls for initial hardware data and real-time status updates.

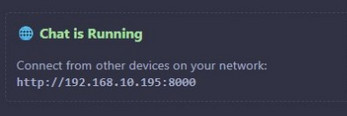

- Network Accessibility: Automatically displays the local IP address, allowing you to connect to your LLM chat from any device on your home network.

- Secure by Design: Features a server-side validation check to ensure that only legitimate copies of the app can access the model data.
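As a rough illustration of the Dynamic Model Library idea, here is a minimal sketch of fetching a JSON almanac and falling back to a local backup when the fetch fails. The URL, the backup filename, and the `load_almanac` helper are all hypothetical; the app's real endpoint, file layout, and validation check are not documented here.

```python
import json
import urllib.request
from pathlib import Path

# Hypothetical values: the real almanac URL and backup filename are not documented.
ALMANAC_URL = "https://example.com/almanac.json"
BACKUP_PATH = Path("almanac_backup.json")


def load_almanac(url=ALMANAC_URL, backup=BACKUP_PATH, timeout=5):
    """Return (models, source), where source is 'remote' or 'backup'."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            models = json.loads(resp.read().decode("utf-8"))
        # Refresh the backup so the next offline start has recent data.
        backup.write_text(json.dumps(models), encoding="utf-8")
        return models, "remote"
    except (OSError, ValueError):
        # OSError covers network failures; ValueError covers bad URLs and bad JSON.
        return json.loads(backup.read_text(encoding="utf-8")), "backup"
```

The returned `source` value is the kind of thing a status line could show to tell you whether the list came from the website or from the backup.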

| Published | 24 days ago |

| Status | Released |

| Category | Tool |

| Platforms | Windows |

| Author | Vibe_Coder |

| Tags | ai, llm-chat, llm-manger, llm-vibecheck, locall-llm, olama |

Download

Install instructions

LLM VibeCheck - The User Guide

⚠️Heads up:

– The .exe is unsigned, so Windows might show a warning. Click “More info” → “Run anyway” and you’re good.

– Your firewall may ask for permission. Allow it so the app can open your browser.

Alright, so you fired up LLM VibeCheck. Nice! You're now holding the Swiss Army knife for running local LLMs with Ollama. This app is designed to figure out what your rig is made of, which models it can actually run, and give you an easy way to manage them all.

The Interface at a Glance

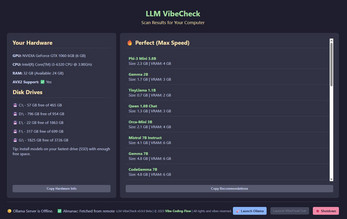

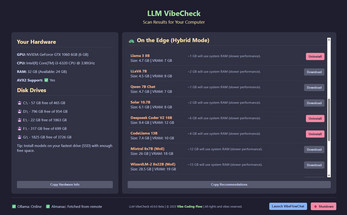

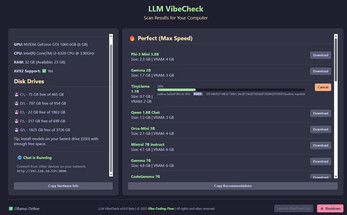

The Left Panel (Your Rig)

Your Hardware: This is where you'll see the key specs of your machine: your graphics card (GPU), processor (CPU), and system memory (RAM). The most important number here is your VRAM (video memory).

Disk Drives: Shows all your drives and how much free space you've got.

Chat is Running: This block pops up only after you launch the chat. It shows you the network IP address, which you can use to connect from other devices on your Wi-Fi, like your phone.

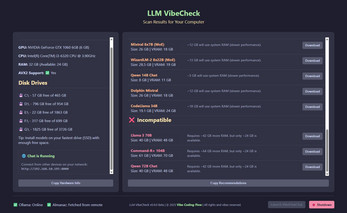
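If you're curious how an app can find the network IP address it shows in the Chat is Running block, here is one common approach, sketched under assumptions. The `local_ip` and `chat_url` helpers and the port number in the usage note are hypothetical, and the app itself may do this differently.

```python
import socket


def local_ip(fallback="127.0.0.1"):
    """Best-effort LAN address of this machine."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        # connect() on a UDP socket sends no packets; it only makes the OS
        # pick the outgoing interface. 192.0.2.1 is a documentation-only address.
        sock.connect(("192.0.2.1", 80))
        return sock.getsockname()[0]
    except OSError:
        # No usable network route, so fall back to loopback.
        return fallback
    finally:
        sock.close()


def chat_url(port, ip=None):
    """Build the address to type into another device's browser."""
    return f"http://{ip or local_ip()}:{port}"
```

For example, `chat_url(8080, ip="192.168.1.20")` gives `http://192.168.1.20:8080`. The port here is made up; use whatever address the app actually displays.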

The Right Panel (Model Recommendations)

Perfect (Max Speed): Models in this list are a perfect match for your GPU. They'll run at max speed because they fit completely in your VRAM.

On the Edge (Hybrid Mode): These models are a little too beefy for your GPU alone. They'll work by using your VRAM and system RAM together. It'll be slower, but totally usable.

Incompatible: These models are just too massive, even for your system RAM, or they need special CPU instructions (like AVX2) that you don't have. Trying to run them probably won't end well.
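The three categories above boil down to a simple comparison of model size against available memory. Here is a minimal sketch of that logic. The `Model` fields, the `classify` function, and the 1 GB headroom are assumptions made for illustration, not the app's actual rules or thresholds.

```python
from dataclasses import dataclass


@dataclass
class Model:
    name: str
    size_gb: float            # approximate memory the model needs when loaded
    needs_avx2: bool = False  # whether the model requires AVX2 CPU instructions


def classify(model, vram_gb, ram_gb, has_avx2=True, headroom_gb=1.0):
    """Sort a model into one of the three recommendation tiers."""
    if model.needs_avx2 and not has_avx2:
        return "Incompatible"
    # headroom_gb is an assumed safety margin for context and runtime overhead.
    needed = model.size_gb + headroom_gb
    if needed <= vram_gb:
        return "Perfect"      # fits completely in VRAM
    if needed <= vram_gb + ram_gb:
        return "On the Edge"  # spills over into system RAM
    return "Incompatible"     # too big even for VRAM and RAM combined
```

On a rig with 8 GB of VRAM and 16 GB of RAM, a 4 GB model would land in "Perfect" and a 12 GB model in "On the Edge" under these assumed numbers.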

The Footer (The Control Panel)

Statuses (Left Side): Here you'll see the status of the Ollama server (installed, online, offline) and the Almanac status (telling you if the model list was fetched from the website or a local backup).

Buttons (Right Side): Your main action buttons for managing everything.
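For the curious, the Ollama statuses in the footer can be approximated with two checks: is the `ollama` command on your PATH, and does the server answer on its default local address (port 11434)? The sketch below assumes exactly that. The function names and status strings are hypothetical, and the app may detect Ollama in a different way.

```python
import shutil
import urllib.request

OLLAMA_URL = "http://127.0.0.1:11434"  # Ollama's default local address


def is_installed():
    """Assumption: Ollama counts as installed if its command is on PATH."""
    return shutil.which("ollama") is not None


def is_online(url=OLLAMA_URL, timeout=2):
    """True if something answers with HTTP 200 at the Ollama address."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # Connection refused, timeout, and similar failures all mean offline.
        return False


def ollama_status(installed=None, online=None):
    """Return 'Not Found', 'Offline', or 'Online'.

    Pass explicit booleans to skip the real checks (handy for testing).
    """
    installed = is_installed() if installed is None else installed
    if not installed:
        return "Not Found"
    online = is_online() if online is None else online
    return "Online" if online else "Offline"
```

Calling `ollama_status()` with no arguments runs both checks against your machine.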

How to Use It

Get the Lay of the Land. First things first, check out the left panel. Make sure the app has correctly identified your hardware and disk space.

Check Your Ollama Status. In the bottom-left corner, you should see Ollama: Online. If not, use the Launch Ollama button to get it running.

Pick a Model. Check out the recommendations on the right. To get started, it's best to pick something from the "Perfect" category.

Manage Your Models. Every model has a button next to it:

Download: Click this to start downloading a model. A progress bar will appear in the middle of the widget.

Uninstall: This appears if a model is already installed. Click it to delete the model and free up space.

Cancel: Appears during a download.

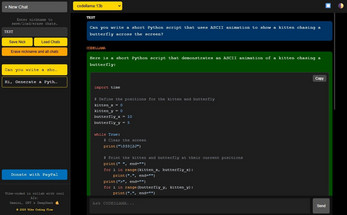

Fire up the Chat! Once you have at least one model downloaded, hit that Launch VibeFlowChat button.

Key Features

Launch Ollama: If the Ollama server isn't running, this button will fire it up in the background for you.

Launch VibeFlow Chat: Boots up the separate chat application. The button grays out while the chat is running to stop you from launching a bunch of copies.

Shutdown: Pops up a menu where you can pick and choose which processes to stop: the Ollama server, the chat app, or LLM VibeCheck itself.

Copy Hardware Info / Copy Recommendations: These buttons copy a clean, text-based report of your hardware or model recommendations to your clipboard. Super handy for sharing.

Troubleshooting & FAQs

"Ollama Not Found": This means you don't have Ollama installed. Grab it from the official website and get it set up.

"Ollama Server is Offline": Ollama is installed but not running. Just hit the Launch Ollama button.

The chat app can't be found: Make sure you've downloaded VibeFlowChat and placed it in the VibeFlowChat folder right next to the LLM VibeCheck .exe, just like the config.json file expects.

Error while downloading a model: Check your internet connection and make sure you have enough free disk space (the required space is listed in the model's description).
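If you want to double-check disk space yourself before a big download, a few lines of Python will do it. This is a minimal sketch: the `has_room` helper and the 1 GB safety margin are assumptions, and you should point `path` at the drive where your models are actually stored.

```python
import shutil
from pathlib import Path


def has_room(required_gb, path=None, margin_gb=1.0):
    """Return (ok, free_gb) for the drive that holds `path`.

    Defaults to the drive with your home folder, which is an assumption
    about where models are stored on your machine.
    """
    target = Path(path) if path is not None else Path.home()
    free_gb = shutil.disk_usage(target).free / 1024**3
    # margin_gb is an assumed buffer so the drive is not filled to the brim.
    return free_gb >= required_gb + margin_gb, free_gb
```

For example, `has_room(4.7)` tells you whether a model listed as needing 4.7 GB would fit with a little room to spare.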

That's everything you need to know! You're all set to dive into the world of local AI. Good luck, and have a good vibe!
