VibeFlow Chat 2.0 - Local AI Chat

A downloadable tool for Windows

Your AI. Your Rules. Your Hardware.

☁️ Forget cloud-based chats.

🧠 VibeFlow Chat 2.0 turns your PC into a powerful, private AI hub.

🤖 Run multiple LLMs at once and switch between them on the fly.

🌐 Chat with your own documents and access it all from any device on your network.

Remember when AI was about exploration and freedom?

🛠️ VibeFlow Chat 2.0 brings that spirit back. We rebuilt our chat from the ground up to create the ultimate personal AI hub that lives entirely on your machine.

🎯 VibeFlow Chat 2.0 isn't just a window to a single model; it's a command center for your entire local AI arsenal. Multi-Model Juggling: load up Llama, Mistral, and Gemma simultaneously.

⚔️ Pit them against each other in the same conversation.

📈 Feed them your own documents and get insights no cloud service could ever offer.

✨ With a sleek new interface, a powerful plugin system, and seamless access from any device on your Wi-Fi, this is the AI experience you've been waiting for.

🚫 No subscriptions, no data mining, no limits.

💪 Welcome to Beast Mode.

Features:

- Multi-Model Juggling: Load and run several different LLMs at the same time. Switch between them instantly within a single chat to compare responses and find the best tool for the job.

- Your Personal AI Server: Access your chat hub from your phone, tablet, or laptop — any device on your local network. Your conversations follow you, saved securely on your own machine.

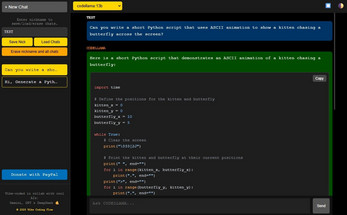

- Sleek Pro UI: A completely redesigned interface built for power users. Enjoy full Markdown support, syntax highlighting for code, and easy one-click copy for developers.

- Future-Proof Plugin System: VibeFlow Chat is now a platform. The new plugin architecture allows for massive future expansion, from connecting to online services to adding custom local tools.

- 100% Private & Offline: Your models, your data, your conversations. Everything runs and is stored locally. No internet connection required, no Big Tech snooping on your data.

| Field | Value |
|---|---|
| Status | Released |
| Category | Tool |
| Platforms | Windows |
| Author | Vibe_Coder |
| Tags | llm-chat, local-llm-ecosystem, ollama, vibeflow-chat |


Install instructions

VibeFlow Chat 2.0: User Guide

⚠️ Heads up:

- The .exe is unsigned, so Windows SmartScreen might show a warning. Click “More info” → “Run anyway” to continue.

- Windows Firewall may ask for permission. Allow it so the app's server can accept connections, including from other devices on your network.

Step 1: Getting Started

1.1. Install the Engine: Ollama

VibeFlow Chat requires the Ollama service to function.

- Navigate to the official website: ollama.com.

- Download and install the version for your operating system.

- After installation, ensure the Ollama service is running (on Windows, you'll see the llama icon in your system tray).
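Before launching VibeFlow Chat, you can confirm that Ollama is actually answering. The sketch below is a hypothetical helper, not part of VibeFlow Chat: it assumes Ollama is listening on its default address, http://localhost:11434, and queries Ollama's /api/tags endpoint, which lists the models you have pulled.

```python
import json
import urllib.request
from typing import List, Optional

# Ollama's default local address; change it if your Ollama listens elsewhere.
OLLAMA_URL = "http://localhost:11434"


def ollama_models(base_url: str = OLLAMA_URL, timeout: float = 2.0) -> Optional[List[str]]:
    """Return the names of locally pulled models, or None if Ollama is unreachable."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
            data = json.load(resp)
    except (OSError, ValueError):
        # OSError covers refused connections and timeouts (urllib's URLError subclasses it);
        # ValueError covers a reply that isn't valid JSON.
        return None
    return [model["name"] for model in data.get("models", [])]


if __name__ == "__main__":
    models = ollama_models()
    if models is None:
        print("Ollama is not reachable. Start it and try again.")
    elif not models:
        print("Ollama is running, but no models are pulled yet.")
    else:
        print("Ollama is running with:", ", ".join(models))
```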

1.2. Install & Run VibeFlowChat.exe

- Download the latest Windows release as a .zip archive from: vibe-coding-flow.com/downloads/vibeflow-chat-v-2-0/

- Extract the archive to a convenient folder.

- To Start: Run VibeFlowChat.exe. A console window with server logs will appear, and a browser tab will open automatically.

- To Stop: Close the black console window.

(Note: Linux and macOS versions are in development.)

1.3. The Console and LAN Access

- The console window shows all server activity and your LAN address.

- Example: http://192.168.1.105:8000

- Open this in any browser on the same Wi-Fi network to access VibeFlow Chat.
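If the console has scrolled past the address, a short script can work out your LAN URL. This is a hedged sketch, not how VibeFlow Chat itself finds the address: it assumes port 8000 (taken from the example above) and uses the common UDP-socket trick of asking the OS which local IPv4 address it would use for outbound traffic. It then checks whether anything is accepting TCP connections on that address and port.

```python
import socket

APP_PORT = 8000  # assumption: from the example address; use the port your console shows


def lan_ip() -> str:
    """Best-effort guess of this machine's LAN IPv4 address."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        try:
            # Connecting a UDP socket sends no packets; it only makes the OS
            # choose an outgoing interface, whose address we read back.
            sock.connect(("192.0.2.1", 80))  # TEST-NET-1 documentation address
            return sock.getsockname()[0]
        except OSError:
            return "127.0.0.1"  # no usable network route; fall back to loopback


def app_url(ip: str, port: int = APP_PORT) -> str:
    """Build the address to type into a browser on another device."""
    return f"http://{ip}:{port}"


def is_reachable(host: str, port: int, timeout: float = 1.0) -> bool:
    """True if something accepts TCP connections on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    ip = lan_ip()
    print("Try this address on other devices:", app_url(ip))
    print("Something is listening there:", is_reachable(ip, APP_PORT))
```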

Step 2: Core Features

- Multi-Model Conversations: Switch between models in the same chat.

- Server-Side History: Stored in a local SQLite database.

- Nickname-Based Profiles: Retrieve chats by nickname.

- Full Chat Management: Create, load, or delete chats.

- Clean & Responsive UI: Optimized for desktop and mobile.

- Markdown & Code Highlighting: Full Markdown rendering and syntax-highlighted code blocks with one-click copy.
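Server-side history and nickname-based profiles come down to a small relational layout. The sketch below is purely illustrative: the table and column names are hypothetical (VibeFlow Chat's actual database layout isn't documented here). It uses Python's built-in sqlite3 module to show how chats keyed by nickname, a per-message model tag for multi-model conversations, and create/load/delete could fit together.

```python
import sqlite3

# Hypothetical schema for illustration only; not VibeFlow Chat's real tables.
SCHEMA = """
CREATE TABLE IF NOT EXISTS chats (
    id       INTEGER PRIMARY KEY,
    nickname TEXT NOT NULL,
    title    TEXT NOT NULL
);
CREATE TABLE IF NOT EXISTS messages (
    id      INTEGER PRIMARY KEY,
    chat_id INTEGER NOT NULL REFERENCES chats(id) ON DELETE CASCADE,
    role    TEXT NOT NULL,  -- 'user' or 'assistant'
    model   TEXT,           -- which model answered, e.g. 'llama3:8b'
    content TEXT NOT NULL
);
"""


def connect(path: str = ":memory:") -> sqlite3.Connection:
    conn = sqlite3.connect(path)
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite needs this for ON DELETE CASCADE
    conn.executescript(SCHEMA)
    return conn


def create_chat(conn: sqlite3.Connection, nickname: str, title: str) -> int:
    with conn:  # commits on success
        cur = conn.execute("INSERT INTO chats (nickname, title) VALUES (?, ?)", (nickname, title))
    return cur.lastrowid


def add_message(conn: sqlite3.Connection, chat_id: int, role: str, content: str, model=None) -> None:
    with conn:
        conn.execute(
            "INSERT INTO messages (chat_id, role, model, content) VALUES (?, ?, ?, ?)",
            (chat_id, role, model, content),
        )


def chats_for(conn: sqlite3.Connection, nickname: str) -> list:
    """All (id, title) pairs belonging to a nickname, oldest first."""
    return conn.execute(
        "SELECT id, title FROM chats WHERE nickname = ? ORDER BY id", (nickname,)
    ).fetchall()


def load_chat(conn: sqlite3.Connection, chat_id: int) -> list:
    """Messages of one chat as (role, model, content), in the order they were added."""
    return conn.execute(
        "SELECT role, model, content FROM messages WHERE chat_id = ? ORDER BY id", (chat_id,)
    ).fetchall()


def delete_chat(conn: sqlite3.Connection, chat_id: int) -> None:
    with conn:  # the cascade removes the chat's messages too
        conn.execute("DELETE FROM chats WHERE id = ?", (chat_id,))


if __name__ == "__main__":
    conn = connect()
    chat = create_chat(conn, "alex", "Compare coders")
    add_message(conn, chat, "user", "Write FizzBuzz in Python.")
    add_message(conn, chat, "assistant", "for i in range(1, 16): ...", model="codegemma:7b")
    print(chats_for(conn, "alex"))
    print(load_chat(conn, chat))
```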

Step 3: Recommended Models

For Standard PCs & Laptops (4-8GB VRAM)

| Role | Model | Pull command | Approx. VRAM |
|---|---|---|---|
| The All-Rounder | llama3:8b | ollama pull llama3:8b | ~5GB |
| The Coder | codegemma:7b | ollama pull codegemma:7b | ~5GB |
| The Super-Lightweight | gemma:2b | ollama pull gemma:2b | ~1.5GB |

For High-End Workstations (16-24GB+ VRAM)

| Role | Model | Pull command | Approx. VRAM |
|---|---|---|---|
| The Pro Coder | deepseek-coder-v2 | ollama pull deepseek-coder-v2 | ~10GB |
| The Heavyweight Conversationalist | deepseek-v2 | ollama pull deepseek-v2 | ~16GB |

For "Monster" Rigs (48GB+ VRAM)

| Role | Model | Pull command | Approx. VRAM |
|---|---|---|---|
| The Ultimate Coder | deepseek-coder:33b | ollama pull deepseek-coder:33b | ~20-22GB |
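Because VibeFlow Chat can keep several models loaded at once, it helps to add up their footprints before pulling. This hypothetical helper simply encodes the approximate VRAM figures listed above (using the upper end of each range) and sums them. It is a rough budgeting aid, not a measurement: actual usage depends on quantization, context length, and whether Ollama offloads part of a model to system RAM.

```python
# Approximate VRAM per model, copied from the recommendations above (upper end of each range).
MODEL_VRAM_GB = {
    "llama3:8b": 5.0,
    "codegemma:7b": 5.0,
    "gemma:2b": 1.5,
    "deepseek-coder-v2": 10.0,
    "deepseek-v2": 16.0,
    "deepseek-coder:33b": 22.0,
}


def models_that_fit(vram_gb: float) -> list:
    """Models whose approximate footprint fits in vram_gb on its own."""
    return [name for name, need in MODEL_VRAM_GB.items() if need <= vram_gb]


def fits_together(models: list, vram_gb: float) -> bool:
    """Rough multi-model check: do these models fit in VRAM at the same time?"""
    return sum(MODEL_VRAM_GB[name] for name in models) <= vram_gb


def pull_commands(models: list) -> list:
    """The ollama pull command for each model name."""
    return [f"ollama pull {name}" for name in models]


if __name__ == "__main__":
    budget = 8.0  # GB of VRAM on your GPU
    for command in pull_commands(models_that_fit(budget)):
        print(command)
    print("llama3:8b + gemma:2b together:", fits_together(["llama3:8b", "gemma:2b"], budget))
```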

Step 4: Performance & Multi-User Support

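Depending on your Ollama version and available memory, Ollama may handle only one request per model at a time by default, so when several devices chat with the same model, their requests wait in line. Raising OLLAMA_NUM_PARALLEL lets each model serve several requests at once, but every extra slot reserves more context memory, so pick a value that matches your VRAM:

- 8-16GB VRAM: Try OLLAMA_NUM_PARALLEL=2

- 24GB+ VRAM: Try OLLAMA_NUM_PARALLEL=3 or 4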
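For a concrete sense of the memory cost: Ollama's FAQ explains that parallel requests multiply the context a model reserves, so a 2,048-token context with 4 parallel slots is allocated as 8,192 tokens. A minimal sketch of that arithmetic follows, plus a hypothetical function mapping the VRAM tiers above to a starting value. The 16-24GB and under-8GB branches are assumptions, since this guide doesn't cover them.

```python
def effective_context(num_ctx: int, num_parallel: int) -> int:
    """Total context reserved for one model: each parallel slot gets num_ctx tokens."""
    return num_ctx * num_parallel


def suggested_num_parallel(vram_gb: float) -> int:
    """Starting value for OLLAMA_NUM_PARALLEL based on this guide's VRAM tiers."""
    if vram_gb >= 24:
        return 3  # the guide suggests 3 or 4; start low and raise it if VRAM allows
    if vram_gb >= 8:
        return 2  # assumption: 16-24GB cards start at the 8-16GB setting
    return 1      # assumption: below 8GB, process one request at a time


if __name__ == "__main__":
    for vram in (6, 12, 24):
        n = suggested_num_parallel(vram)
        print(f"{vram}GB VRAM -> OLLAMA_NUM_PARALLEL={n}; "
              f"a 2048-token context reserves {effective_context(2048, n)} tokens")
```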

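To configure (Windows):

- Quit Ollama from its system tray icon.

- Create a system or user environment variable named OLLAMA_NUM_PARALLEL with your value (search for "environment variables" in Settings or Control Panel).

- Start Ollama again from the Start menu so it picks up the new value.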
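After restarting Ollama, a rough check can show whether requests really run side by side. The sketch below is hypothetical and uses only Ollama's documented REST API (POST /api/generate on the default port 11434): it times one request, then four at once. If the four together take roughly four times as long as one, requests are still being queued; if they finish closer to the time of one, parallel processing is active. It assumes gemma:2b is pulled (swap in any model you have), and the environment-variable readout reflects only the terminal you run it from, so open a new terminal after setting the variable.

```python
import json
import os
import time
import urllib.request
from concurrent.futures import ThreadPoolExecutor

OLLAMA_URL = "http://localhost:11434"  # Ollama's default local address
MODEL = "gemma:2b"  # assumption: replace with any model you have already pulled


def configured_parallel(env=None):
    """OLLAMA_NUM_PARALLEL as seen by this terminal, or None if unset or not a number."""
    env = os.environ if env is None else env
    value = env.get("OLLAMA_NUM_PARALLEL", "").strip()
    return int(value) if value.isdigit() else None


def timed_generate(prompt: str) -> float:
    """Send one non-streaming /api/generate request and return its duration in seconds."""
    body = json.dumps({
        "model": MODEL,
        "prompt": prompt,
        "stream": False,
        "options": {"num_predict": 32},  # cap output length so timings are comparable
    }).encode()
    request = urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    start = time.perf_counter()
    with urllib.request.urlopen(request, timeout=300) as response:
        response.read()
    return time.perf_counter() - start


if __name__ == "__main__":
    print("OLLAMA_NUM_PARALLEL in this terminal:", configured_parallel())
    prompts = ["Name a color."] * 4
    try:
        timed_generate("Hi")  # warm-up: loads the model so loading time isn't measured
        single = timed_generate(prompts[0])
        start = time.perf_counter()
        with ThreadPoolExecutor(max_workers=len(prompts)) as pool:
            list(pool.map(timed_generate, prompts))
        together = time.perf_counter() - start
    except OSError as exc:  # refused connection, timeout, or HTTP error (e.g. model not pulled)
        print("Could not complete the requests:", exc)
    else:
        # Close to len(prompts)x means queued; closer to 1x means handled in parallel.
        print(f"one request: {single:.1f}s, {len(prompts)} at once: {together:.1f}s "
              f"({together / single:.1f}x)")
```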

Wishing you an excellent vibe while using VibeFlow Chat!
